Psychoacoustics sounds like an abstract scientific concept, but it comes into play whenever you listen to music.

Listening to a track seems simple enough, but the way you experience your own songs and mixes is far from objective.

The systems in your brain and sensory organs that turn vibrating air patterns into music are full of unusual quirks.

Understanding the basics of how they work can help you with some of the most fundamental processes in mixing.

In this article, I’ll explain the basics of psychoacoustics and show why it affects your music.

What’s psychoacoustics?

Psychoacoustics is the science of how people perceive and understand sound. It includes the study of the mechanisms in our bodies that interpret sound waves, as well as the processes that happen in our brains when we listen.

That may sound purely academic, but some psychoacoustic phenomena have a huge impact on music production, especially mixing and mastering.

I’ll go through the most important psychoacoustics concepts for music producers, explain why they matter, and show how understanding them can help you make better music.

Music perception and cognition

Psychoacoustics is divided into two main areas: perception and cognition.

Perception deals with the human auditory system, and cognition focuses on what happens in the brain.

The two systems are tightly linked and affect each other in many ways.

Let’s begin with perception.

Human hearing range

Your experience of sound and music would be completely different if your senses worked like audio measuring equipment.

For starters, your body’s auditory system can only process sound waves within a certain range of frequencies.

That range spans from 20 Hz to 20 kHz.

Frequencies below 20 Hz stop sounding like a unified tone and become more like a series of pulses. Frequencies above 20 kHz disappear entirely, if you’re lucky enough to hear that far.

20 kHz is the upper limit for the most sensitive human listeners. Most adults drop off much earlier, at around 16 or 17 kHz.

That range may seem wide, but there’s plenty of audio activity at frequencies we’ll never experience.

For instance, the echolocation systems of bats and dolphins can detect frequencies of over 100 kHz. Imagine what that sounds like!

Equal loudness contours

Even within the 20 Hz to 20 kHz range, your hearing isn’t a tidy linear system.

Some frequencies seem more intense than others, even when they’re at exactly the same decibel level.

The reason has to do with the structure of your inner ear.

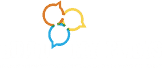

After a sound enters your ear, it travels through an organ called the cochlea that looks a bit like a rolled-up garden hose.

The sound waves excite tiny hair cells, each topped with bundles called stereocilia, that line the cochlea’s interior.

These cells send the electrical signals your brain interprets as sound and music. However, the hair cells themselves aren’t evenly distributed throughout the cochlea.

They’re clustered in the areas that help us process the most common sounds in our environment. For instance, critical ranges like the upper midrange, where human speech happens, seem naturally louder to us.

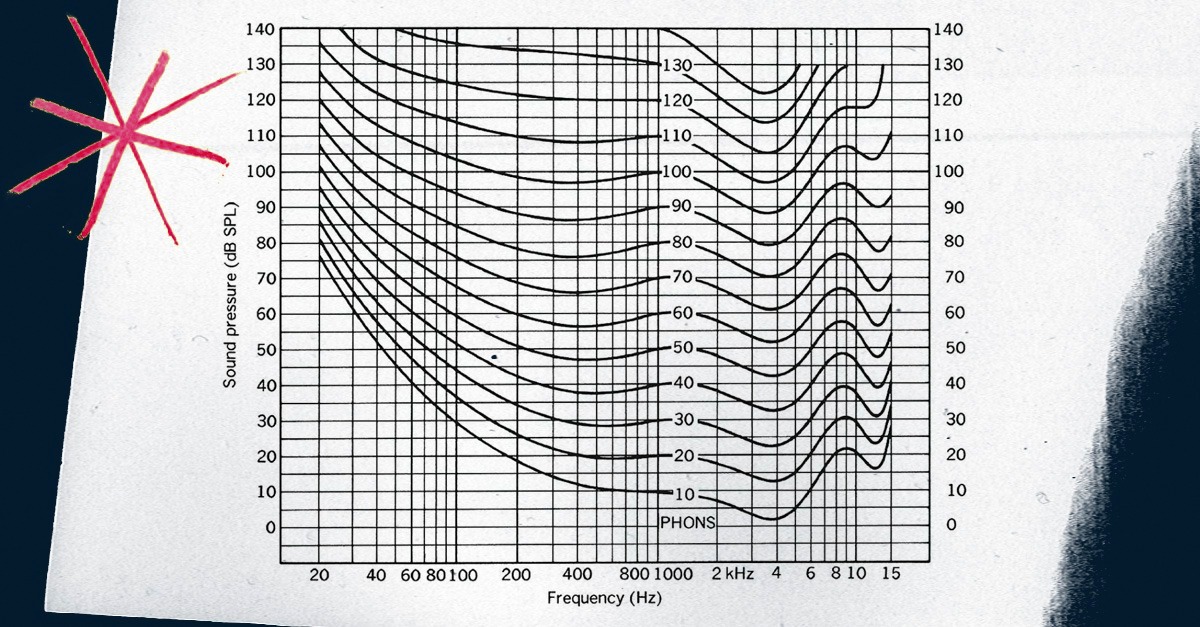

To make sense of these differences, researchers created graphs that show how frequency and sound pressure level (SPL) are related in our perception.

These charts are called equal loudness contours, or sometimes Fletcher-Munson curves after the first researchers to work on them.

Every point along a single curve on the graph is perceived as equally loud.

You can simply follow the line to see which parts of the spectrum get emphasized by your perception system.

In mixing, this explains why pushing the upper midrange frequencies just a few dB louder with your EQ plugins can make such a drastic difference.
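To get a rough feel for how uneven that sensitivity is, the sketch below computes the standard A-weighting curve, which was loosely modeled on an equal loudness contour at quiet listening levels. It is only an approximation of the contours themselves, and the function name `a_weighting_db` is my own choice for illustration, not something from a particular plugin or library.

```python
import math

def a_weighting_db(freq_hz: float) -> float:
    """Approximate relative sensitivity in dB at freq_hz (must be > 0),
    using the A-weighting curve as a stand-in for an equal loudness contour."""
    f2 = freq_hz ** 2
    numerator = (12194.0 ** 2) * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    # The +2.00 dB offset normalizes the curve to roughly 0 dB at 1 kHz.
    return 20.0 * math.log10(numerator / denominator) + 2.00

# Compare a few spots across the spectrum against the 1 kHz reference.
for freq in (50, 100, 1000, 3000, 10000):
    print(f"{freq:>6} Hz: {a_weighting_db(freq):+.1f} dB")
```

Negative values mark frequencies that need more level to seem as loud as 1 kHz, which is why the low end drops away so steeply.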

Auditory masking

Even with a single sound source, your perception system has an enormous effect.

But start layering sounds together and it gets even more complicated.

When several sounds are played at the same time, a psychoacoustic phenomenon known as masking comes into play.

Masking explains why it’s so hard to clearly hear the timbre of two sounds with overlapping frequencies.

Each sound is distinct in the air. However, in your inner ear, they excite the same areas of hair cells if they reach the cochlea at a similar time.

If they’re close enough, you can’t tell them apart.

Your auditory nerve needs a minimum frequency difference between two sounds to process them separately.

Masking is one of the main reasons equalization is used so often in mixing. Most of the signals you’ll be adding together at your master bus have frequency energy in common areas.

To EQ a track effectively, you need to reduce frequencies that don’t contribute to the role of a sound in your mix, while emphasizing those that matter.
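The minimum frequency separation behind masking is often approximated with the equivalent rectangular bandwidth (ERB) formula from Glasberg and Moore. The sketch below is a deliberate simplification under that assumption: it flags two tones as likely to clash when they sit within one ERB of each other, and it ignores level and timing entirely. The function names are hypothetical.

```python
def erb_hz(center_hz: float) -> float:
    """Glasberg-Moore approximation of the auditory filter bandwidth at center_hz."""
    return 24.7 * (4.37 * center_hz / 1000.0 + 1.0)

def likely_to_mask(freq_a_hz: float, freq_b_hz: float) -> bool:
    """Crude check: do the two tones fall within one ERB of each other?"""
    center = (freq_a_hz + freq_b_hz) / 2.0
    return abs(freq_a_hz - freq_b_hz) < erb_hz(center)

# Two tones 50 Hz apart near 1 kHz versus two tones an octave apart.
print(likely_to_mask(1000, 1050))
print(likely_to_mask(1000, 2000))
```

Because the bandwidth grows with frequency, two sounds need to be further apart in Hz to stay distinct in the highs than in the lows.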

Sound localization

If that weren’t enough, your auditory system also affects the way you locate sounds around you in space.

The process is called sound localization, and it helps you situate the sources of various sounds in your environment.

Sound localization depends on a number of different factors to help you determine where a sound is coming from.

The first is the physical distance between your ears themselves.

Since human ears are positioned on both sides of the head, sound from different directions reaches the left and right ear at slightly different times and intensities.

Your brain uses these differences, together with other cues from the tone and timbre, to work out details about the origin.
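The timing difference can be estimated with Woodworth’s spherical-head formula. This is a minimal sketch, assuming a head radius of about 8.75 cm and a speed of sound of 343 m/s. Both values, and the function name, are illustrative assumptions rather than measurements.

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0   # assumed, air at roughly 20 degrees Celsius

def interaural_time_difference_s(azimuth_deg: float) -> float:
    """Woodworth estimate of the arrival-time gap between the ears.

    azimuth_deg: 0 is straight ahead, 90 is directly to one side.
    The formula is intended for distant sources between 0 and 90 degrees.
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))

for angle in (0, 30, 60, 90):
    itd_us = interaural_time_difference_s(angle) * 1e6
    print(f"{angle:>2} degrees: {itd_us:.0f} microseconds")
```

Even for a sound directly to one side, the gap stays well under a millisecond.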

This process is what makes it possible to use panning in your mix to create a nice, wide stereo image.
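Panning in a mix leans mostly on the intensity side of those cues. As a sketch of one common approach, the constant-power pan law below splits a mono signal into left and right gains so the combined power stays the same across the stereo field. The function name and the -1 to 1 pan range are assumptions for illustration, and your DAW may use a different law.

```python
import math

def constant_power_pan(pan: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for pan in [-1.0, 1.0].

    -1.0 is hard left, 0.0 is center, 1.0 is hard right.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    # Map the pan position onto a quarter circle so left^2 + right^2 stays at 1.
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)

for position in (-1.0, -0.5, 0.0, 0.5, 1.0):
    left, right = constant_power_pan(position)
    print(f"pan {position:+.1f}: L={left:.3f} R={right:.3f}")
```

At center, both channels sit at about 0.707, or roughly 3 dB down, instead of full level in each speaker.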

Cognition

Music cognition gets a whole lot more complicated. Your brain has powerful pattern recognition systems that allow you to engage with the language of music.

Why does a 2:1 frequency ratio produce an octave? Answering fundamental questions like this would take an advanced university degree, so I won’t get into it here.

Even so, there are some simple principles in music cognition that will help you produce better music.

For instance, cognitive psychoacoustics shows up in situations where a listener’s internal bias affects how they evaluate different elements in the production process.

I’m talking about situations like selecting between cheap or expensive gear, or different audio file formats.

For some controversial gear like boutique cables, even accomplished mix engineers can’t tell the sonic difference between cheap and expensive in blind scientific tests.

However, when participants are told which is which, many choose subconsciously according to their internal bias.

Getting over your biases in mixing is hard, but it’s one of the essential parts of becoming a great producer.

Sonic experience

It’s clear that your body and your brain work together to create your experience of music.

These puzzling fundamental questions aren’t essential to your everyday life as a musician. However, they can help you be more informed about the work you do.

Now that you understand some of the basic concepts in psychoacoustics, get back to your music and see how they apply.